Researchers have built a new memory device that could cut the energy consumed by AI computing by a factor of at least 1,000.

The device, known as computational random-access memory (CRAM), performs calculations directly within its memory cells. This eliminates the need to shuttle data between separate parts of a computer.

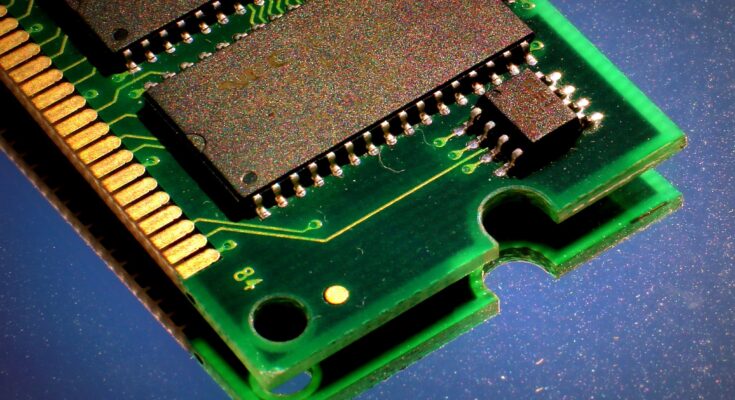

In traditional computing, data constantly travels between the processor, where it is processed, and the memory, where it is stored. In most computers, this memory is called RAM. That back-and-forth movement uses a lot of energy, especially in AI applications.
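The difference between the two approaches can be pictured with a toy accounting model. The sketch below is purely illustrative and is not how CRAM or any real hardware is programmed: the `TransferCounter` class and both `add_*` functions are hypothetical names, and the model simply assumes that each word crossing the processor-memory boundary counts as one transfer.

```python
from dataclasses import dataclass


@dataclass
class TransferCounter:
    """Toy tally of words moved between processor and memory (hypothetical)."""
    words_moved: int = 0


def add_conventional(memory, i, j, out, bus):
    """Add two stored values the traditional way: fetch, compute, write back."""
    a = memory[i]
    bus.words_moved += 1      # first operand travels to the processor
    b = memory[j]
    bus.words_moved += 1      # second operand travels to the processor
    memory[out] = a + b
    bus.words_moved += 1      # result travels back to memory


def add_in_memory(memory, i, j, out, bus):
    """Add two stored values where they live; this model counts no transfers."""
    memory[out] = memory[i] + memory[j]


conventional_bus = TransferCounter()
conventional_memory = [3, 4, 0]
add_conventional(conventional_memory, 0, 1, 2, conventional_bus)

in_memory_bus = TransferCounter()
in_memory_cells = [3, 4, 0]
add_in_memory(in_memory_cells, 0, 1, 2, in_memory_bus)

print("Conventional transfers:", conventional_bus.words_moved)
print("In-memory transfers:", in_memory_bus.words_moved)
```

Both versions produce the same sum. Only the amount of data movement differs, and that movement is the cost the in-memory approach aims to avoid.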

AI workloads combine complex calculations with large volumes of data, which makes the process even more energy-intensive, according to Live Science.

The International Energy Agency reports that global energy use for AI could more than double, from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026. The 2026 figure is roughly equal to Japan’s total electricity consumption.
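For a sense of scale, the two figures can be turned into a growth factor and an implied yearly rate. This is a back-of-the-envelope sketch: the steady compound growth between 2022 and 2026 is an assumption made here for illustration, not something the forecast states.

```python
# Figures quoted in the article.
START_YEAR, START_TWH = 2022, 460.0
END_YEAR, END_TWH = 2026, 1000.0

# Overall growth across the forecast window.
growth_factor = END_TWH / START_TWH

# Assumption: growth compounds evenly each year (illustrative only).
years = END_YEAR - START_YEAR
annual_rate = growth_factor ** (1 / years) - 1

print(f"Overall growth: {growth_factor:.2f}x")
print(f"Implied compound annual growth: {annual_rate:.1%}")
```

Under that assumption the forecast works out to roughly 2.17 times the 2022 level, or about 21 percent growth per year.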

New memory device can perform AI tasks in 434 nanoseconds

In a study published on July 25 in the journal npj Unconventional Computing, researchers showed that CRAM could perform key AI tasks such as scalar addition and matrix multiplication in just 434 nanoseconds, using only 0.47 microjoules of energy. That is about 2,500 times less energy than traditional systems that keep logic and memory in separate components.
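The quoted numbers allow a quick sanity check. The sketch below assumes the 434 nanoseconds and 0.47 microjoules describe the same operation, and that the 2,500-fold ratio applies to that operation. The baseline energy and average power it derives are implied values, not figures reported in the study.

```python
# Figures quoted in the article.
CRAM_ENERGY_J = 0.47e-6   # 0.47 microjoules
CRAM_TIME_S = 434e-9      # 434 nanoseconds
ENERGY_RATIO = 2500       # "about 2,500 times less energy"


def implied_baseline_energy_j(cram_energy_j, ratio):
    """Energy a conventional system would need if the ratio holds exactly."""
    return cram_energy_j * ratio


def average_power_w(energy_j, time_s):
    """Average power drawn while the task runs (energy divided by time)."""
    return energy_j / time_s


baseline_j = implied_baseline_energy_j(CRAM_ENERGY_J, ENERGY_RATIO)
power_w = average_power_w(CRAM_ENERGY_J, CRAM_TIME_S)

print(f"Implied conventional energy: {baseline_j * 1e3:.3f} mJ")
print(f"Average CRAM power during the task: {power_w:.2f} W")
```

On these assumptions, a conventional system would spend a little over one millijoule on the same task, while CRAM would draw about one watt on average for less than half a microsecond.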

The research was two decades in the making and was funded by several organizations, including the US Defense Advanced Research Projects Agency (DARPA), the National Institute of Standards and Technology, the National Science Foundation, and the tech company Cisco.

Jian-Ping Wang, a senior author of the study and a professor at the University of Minnesota, said that the idea of using memory cells for computing was initially considered “crazy.”

Wang explained that since 2003, a dedicated team of students and faculty from various fields worked on the project.

The team included experts in physics, materials science and engineering, computer science and engineering, and modeling and hardware creation. Over the years, the team proved that the technology is practical for real-world applications.

Traditional RAM vs New CRAM

Traditional RAM devices usually need four or five transistors to store one bit of data, either a 1 or a 0.

CRAM is more efficient because it uses “magnetic tunnel junctions” (MTJs). An MTJ is a tiny device that uses the spin of electrons to store data, unlike traditional memory, which relies on electrical charges. This makes CRAM faster, more energy-efficient, and more durable than conventional RAM chips.

Researchers stated that CRAM can adapt to various AI algorithms, making it a flexible and energy-efficient option for AI computing.